Hollywood Superstitions vs. Data Science: Investigating for 99PI

27 Mar 2020

Where do we start the tale from?

Science is all about asking the right questions, yada yada, something like that. But normally the sorts of questions I ask require ages of preparation, multiple failed experiments, and oodles of tedious computer analysis. However, this time it was going to be different. We had a simple question, easy data, and a straightforward approach. A simple job, in and out, right?

Our question was about questions—questions, question marks, the film industry, and making money.

Is Anyone Listening—A Podcast Story

My twin brother and I have a deep and abiding love for educational podcasts.[1] One of my favorites, one that highlights my secret passion for urban planning and design, is 99 Percent Invisible. 99 Percent Invisible (often referred to as 99PI) is hosted by Roman Mars, and other than being a thinly veiled advertisement for the city of “beautiful downtown Oakland, California,” it is a podcast about the ninety-nine percent of design, architecture, and life that goes by unseen and unnoticed.

For example, on February 11th, 99PI released an episode about the song “Who Let the Dogs Out” by the Baha Men. If you thought a catchy hook and repetitive lyrics were all this song had to offer, you’re in for an exceptionally riveting episode. I’m not joking: it is absolutely surprising how deep the rabbit hole goes on that one. But in pursuing the “white whale” (the inscrutable question of WHO actually let the dogs out), song expert Ben Sisto casually notes that the song’s title, although a question, lacks a question mark.

Have You Seen My Movie?

In the 99PI episode, this observation kick-starts a conversation between 99PI producer Chris Berube and movie producer Liz Watson. They realize that plenty of movies whose titles are phrased as questions actually lack the correct punctuation. Take, for instance, Who Framed Roger Rabbit. Apparently, the director of the movie ditched the question mark because, as Liz explains, “there is a superstition in Hollywood that if you put a question mark at the end of your title, the movie will bomb at the box office.” While mulling this over, Chris and Liz point out that movies like Who’s Afraid of Virginia Woolf? and They Shoot Horses, Don’t They? were successes, even in the face of the dreaded question-marked title.

In the end, such worries are chalked up as the trivial preoccupations of an irrational movie industry. As Liz says:

“You can’t fully predict how people are going to act. […] I mean, these kinds of superstitions are just trying to put lightning in a bottle and trying to find any kind of rhyme or reason to what is ultimately such a multi-variant and shifting public mood that will put or not put money in your pocket, that you’ll latch on to stuff like question marks in the titles, which is the equivalent of wearing the same pair of shorts for every NCAA finals game you play in.”

Their take-home message for this human-interest story is that industry behavior is guided by the untestable, and that, “ultimately, there’s kind of no answer to the mystery except to say that all creativity and art is a mystery.”

[Cue an electric guitar riff that pierces the silence and hangs in the air. Jump cut to a close shot of me, revving a chainsaw and then shredding a sign that reads ‘Humanities’ or ‘Mystery’ or ‘Art’ on it, MythBusters style. Maybe I look up into the camera and say something badass/pithy like, “Let’s DO this!” or “I didn’t get a science degree just to deal with this bullshit!”]

Can We Do It Ourselves?

I don’t have the tools to solve many problems, but I felt that THIS was one of the few mysteries I could address. However, we need to be clear on what exactly our questions are.

Chris Berube and Liz Watson bring up several predictions in this episode:

- Question marks at the end of movie titles will cause them to “bomb.”

- Question marks are more commonly used in certain movie genres.

- Question marks work better for comedies than for dramas.

Zach and I thought about it, and we concluded that these predictions would be a piece of cake to test. I mean, isn’t that what something like the Internet Movie Database is for? We just grab the movie database with titles, genres, and degrees of success from IMDB and then compare the titles with and without question marks. A simple job, in and out, easy peasy.

And if we make the assumption that online IMDB user ratings (on a 1-10 scale) are a trustworthy indicator of movie success,[2] then this data really is just sitting around, free to access.

Yet there’s one component still missing: not all movie titles are interrogatives. It doesn’t make sense to compare All Babes Want to Kill Me with Who Killed Cock Robin?; we are only interested in titles where including a question mark would be appropriate. We need to find the subset of titles that are phrased as questions.

What is Acceptable Language/Heroes

Well. It turns out that it is not easy to make a computer automatically determine whether a phrase is a question or not. Even for us humans, it’s not always clear. I hate to throw Chris under the bus here, but in the 99PI episode, he claimed that Guess Who’s Coming To Dinner constitutes a question… and it’s not. Although the sentence clearly begs for an answer, it’s technically phrased as a command instead. And consider titles like How far the stars. What even IS that? A noun phrase? A half-formed query? Let’s not forget my absolute favorite title, What What (In the Butt): The Movie. Additionally, titles like What Goes Up are probably not intended as interrogatives, but they remain grammatically indistinguishable from questions. And what about foreign language titles like Did Livogo Kraynogo and May lalaki sa ilalim ng kama ko? Clearly, some amount of syntactic analysis is required.

You might erroneously think that having a twin brother with a (soon-to-be-acquired) doctorate in linguistics would be very helpful here. Boy, I know I certainly started out with that hope.

Ahem. Anyway, we figured that because most grammatical questions follow certain syntactic structures, we could find our movies by breaking the titles up into individual words and investigating their order and parts of speech (i.e., verbs, nouns, adjectives, etc.). To make a very long story short, I learned how to use the natural language processing R package openNLP to tag parts of speech, and my brother filtered our massive database with his access to a large computing cluster. (Check his description of the nitty-gritty details for more info.)
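To give a feel for what “investigating word order” means, here is a deliberately crude sketch. It is not our openNLP pipeline: it swaps part-of-speech tagging for two hand-written word lists (the names `WH_WORDS`, `AUXILIARIES`, and `looks_like_question` are all hypothetical) and simply asks whether a title opens with a wh-word or an auxiliary verb, which is the most common shape of an English question.

```python
import re

# Hypothetical word lists: a crude stand-in for real part-of-speech tagging.
WH_WORDS = {"who", "whom", "whose", "what", "which", "where", "when", "why", "how"}
AUXILIARIES = {
    "am", "is", "are", "was", "were", "do", "does", "did", "have", "has", "had",
    "can", "could", "will", "would", "shall", "should", "may", "might", "must",
}


def first_word(title: str) -> str:
    """Lowercased first word of a title, keeping apostrophes (e.g. "who's")."""
    normalized = title.replace("\u2019", "'")  # curly apostrophe -> straight
    match = re.match(r"\s*([A-Za-z']+)", normalized)
    return match.group(1).lower() if match else ""


def looks_like_question(title: str) -> bool:
    """Flag titles that open with a wh-word or an auxiliary verb.

    Negated auxiliaries ("isn't", "don't") and tag questions
    ("..., don't they?") are deliberately not handled.
    """
    stem = first_word(title).split("'")[0]  # "who's" -> "who"
    return stem in WH_WORDS or stem in AUXILIARIES


titles = [
    "Who Framed Roger Rabbit",
    "Guess Who's Coming to Dinner",
    "What Goes Up",
    "Do or Die",
    "They Shoot Horses, Don't They?",
]
for t in titles:
    print(f"{looks_like_question(t)!s:5}  {t}")
```

Run on these titles, the rule flags Who Framed Roger Rabbit and correctly skips the imperative Guess Who’s Coming to Dinner, but it also flags What Goes Up and Do or Die and misses They Shoot Horses, Don’t They?. Those are exactly the edge cases that make a real syntactic analysis necessary.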

Is That the Question?

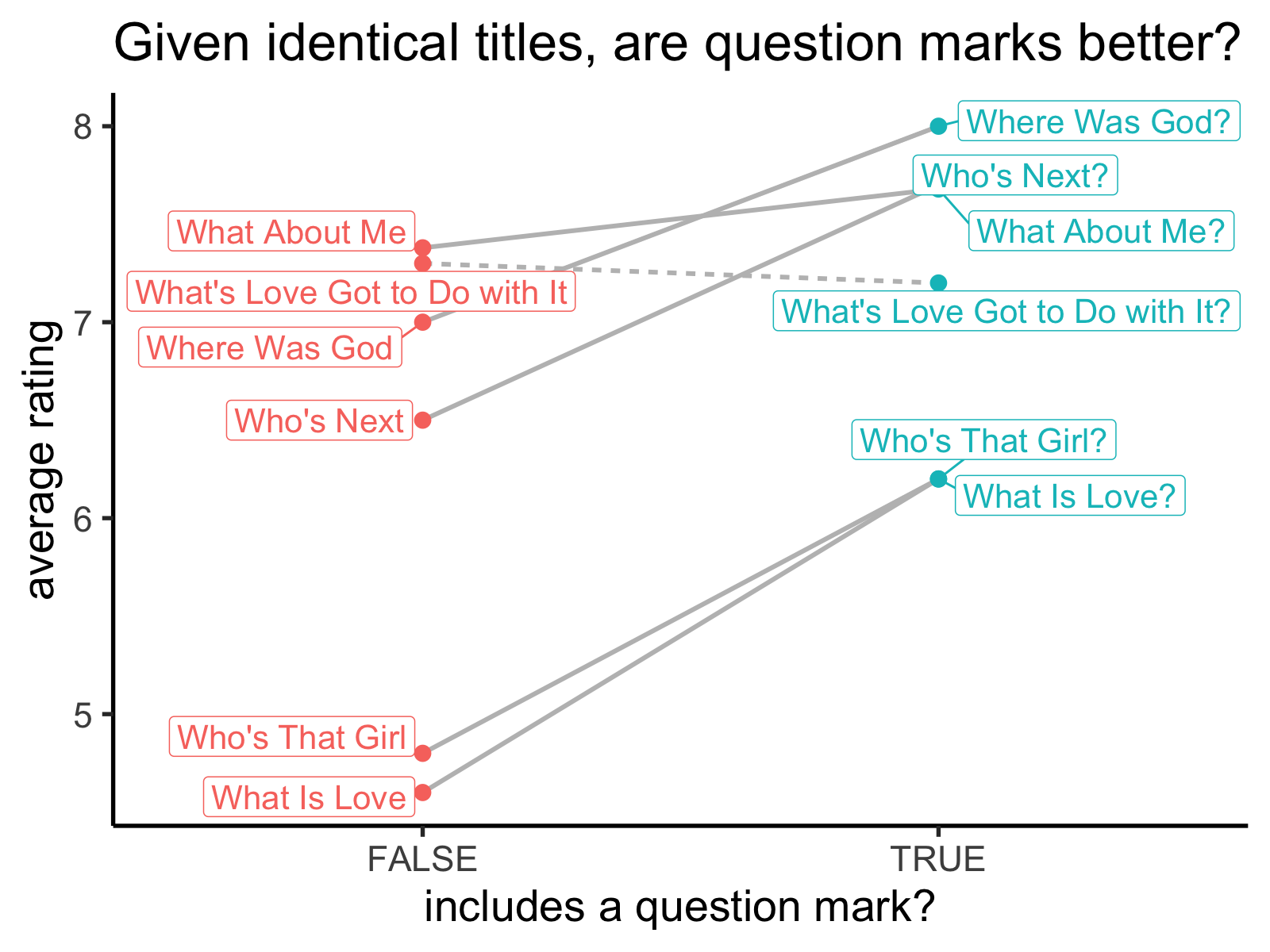

Okay! With a refined, polished dataset in tow, Zach suggested we start by testing an incredibly stringent, very literal interpretation of the first prediction, just for fun. Do movies without question marks rate better? There were six pairs[3] of movies with identical titles, differing only by the inclusion or exclusion of a question mark (e.g., Who’s That Girl? and Who’s That Girl, What Is Love? and What Is Love, etc.). When we look at the data, there is clearly a pattern: other than the unfortunate What’s Love Got to Do with It?, each question-marked version has a higher rating than its counterpart.

When we compare paired movie titles, the counterparts with question marks get better ratings! (Except for the one pair marked by a dashed line.)
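Finding those pairs amounts to grouping titles by their text with any trailing question mark removed, then keeping only the groups that contain both versions. The sketch below assumes exact title matches (no case folding or other normalization), and `find_mark_pairs` plus the example catalog are hypothetical, not taken from our scripts.

```python
from collections import defaultdict


def find_mark_pairs(titles):
    """Group titles that are identical except for a trailing question mark.

    Returns {base_title: {"with": [...], "without": [...]}}, keeping only
    base titles that occur both with and without the mark.
    """
    groups = defaultdict(lambda: {"with": [], "without": []})
    for title in titles:
        cleaned = title.strip()
        has_mark = cleaned.endswith("?")
        base = cleaned.rstrip("?").rstrip()
        groups[base]["with" if has_mark else "without"].append(title)
    return {base: g for base, g in groups.items() if g["with"] and g["without"]}


# Hypothetical catalog; "Why Me?" and "Heat" have no counterpart and drop out.
catalog = [
    "Who's That Girl?", "Who's That Girl",
    "What About Me?", "What About Me?", "What About Me", "What About Me",
    "Why Me?", "Heat",
]
for base, group in find_mark_pairs(catalog).items():
    print(base, len(group["with"]), len(group["without"]))
```

Keeping lists rather than single titles per side is what lets a duplicated title like What About Me show up as the “quad-pairing” instead of silently overwriting one of its entries.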

Because this data is fairly normal (i.e., it has the bell-shaped curve that many analyses rely on), we can run a paired t-test to see whether the difference between them is statistically significant:

```r
# Are titles with question marks differently rated than those without? Yes
t.test(averageRating ~ has_mark, paired = TRUE, data = paired_df_plot)
```

```
	Paired t-test

data:  averageRating by has_mark
t = -3.3236, df = 5, p-value = 0.02092
alternative hypothesis: true difference in means is not equal to 0
95 percent confidence interval:
 -1.5961144 -0.2039208
sample estimates:
mean of the differences
             -0.9000176
```

Even using only a handful of films, we find this difference statistically significant. Perhaps question marks actually help movies do better!
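Under the hood, a paired t-test is just a one-sample t-test on the per-pair differences: t is the mean difference divided by its standard error, with n − 1 degrees of freedom. The Python sketch below shows that arithmetic; the function name `paired_t` is hypothetical, and it assumes `has_mark` is a logical column, so R orders FALSE before TRUE and the differences are (without − with). Under that assumption, the negative mean difference above means the question-marked versions scored higher. The last lines also rebuild the reported 95% interval from R’s own summary numbers.

```python
import math
from statistics import mean, stdev


def paired_t(without_mark, with_mark):
    """Paired t statistic on the differences (without - with).

    Returns (t, df, mean_diff). A negative mean_diff means the
    question-marked versions scored higher.
    """
    if len(without_mark) != len(with_mark) or len(without_mark) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(without_mark, with_mark)]
    mean_diff = mean(diffs)
    std_err = stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff / std_err, len(diffs) - 1, mean_diff


# Cross-check the interval reported above from its own summary numbers:
# SE = mean_diff / t, and 2.5706 is the 97.5th percentile of the t
# distribution with 5 df (taken from a t table, not computed here).
reported_mean, reported_t, t_crit = -0.9000176, -3.3236, 2.5706
std_err = reported_mean / reported_t
print(reported_mean - t_crit * std_err, reported_mean + t_crit * std_err)
```

The printed bounds match R’s interval (−1.5961, −0.2039) to four decimal places; the tiny residual comes from rounding in the reported t and the table value.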

However, there is a much bigger pool of movies waiting to be analyzed; very few of our films have a paired counterpart. To deal with this large and ungainly dataset,[4] we can whip out my favorite statistical sledgehammer, ye olde Kruskal-Wallis rank sum test,[5] and BAM! The answer seems to be a clear and resounding “no.” (The large p-value below indicates a non-significant difference.)

However, when we compare ALL the movies with interrogative titles, we find no difference between those with a question mark and those without. The plot is interactive, so try hovering your mouse over the points to see if you recognize any of the titles.

```r
# Are titles with question marks differently rated than those without? No
kruskal.test(averageRating ~ has_mark, data = zach_df)
```

```
	Kruskal-Wallis rank sum test

data:  averageRating by has_mark
Kruskal-Wallis chi-squared = 0.31375, df = 1, p-value = 0.5754
```

Although this result goes against the findings of the previous test,[6] the vastly increased sample size lends it much more credence. As Chris and Liz espouse in the podcast, the data suggests that you really can take or leave this form of punctuation with very little effect.
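The Kruskal-Wallis statistic H only looks at ranks: pool all ratings, rank them (ties get the average rank), and measure how far each group’s rank sum strays from what chance would give. R’s `kruskal.test` applies a tie correction and compares H with a chi-squared distribution on (groups − 1) degrees of freedom. Here is a stdlib Python sketch of the same recipe; the function names are hypothetical, and the p-value helper only covers the 1-df case we need, using the identity P(χ²₁ > x) = erfc(√(x/2)).

```python
import math


def rank_with_ties(values):
    """1-based ranks; tied values all get the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks


def kruskal_wallis(*groups):
    """Tie-corrected Kruskal-Wallis H; returns (H, degrees_of_freedom)."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = rank_with_ties(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    counts = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all values are tied; H is undefined")
    return h / correction, len(groups) - 1


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-squared variable with 1 df."""
    return math.erfc(math.sqrt(x / 2))


# Hypothetical ratings for titles with and without a question mark.
h, df = kruskal_wallis([6.1, 7.0, 5.5, 6.4], [6.4, 5.9, 7.2, 6.0, 5.1])
print(h, df, chi2_sf_df1(h))
```

As a quick check, `chi2_sf_df1(0.31375)` returns about 0.575, in line with the p-value R reports for our full dataset.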

What Lies Beneath

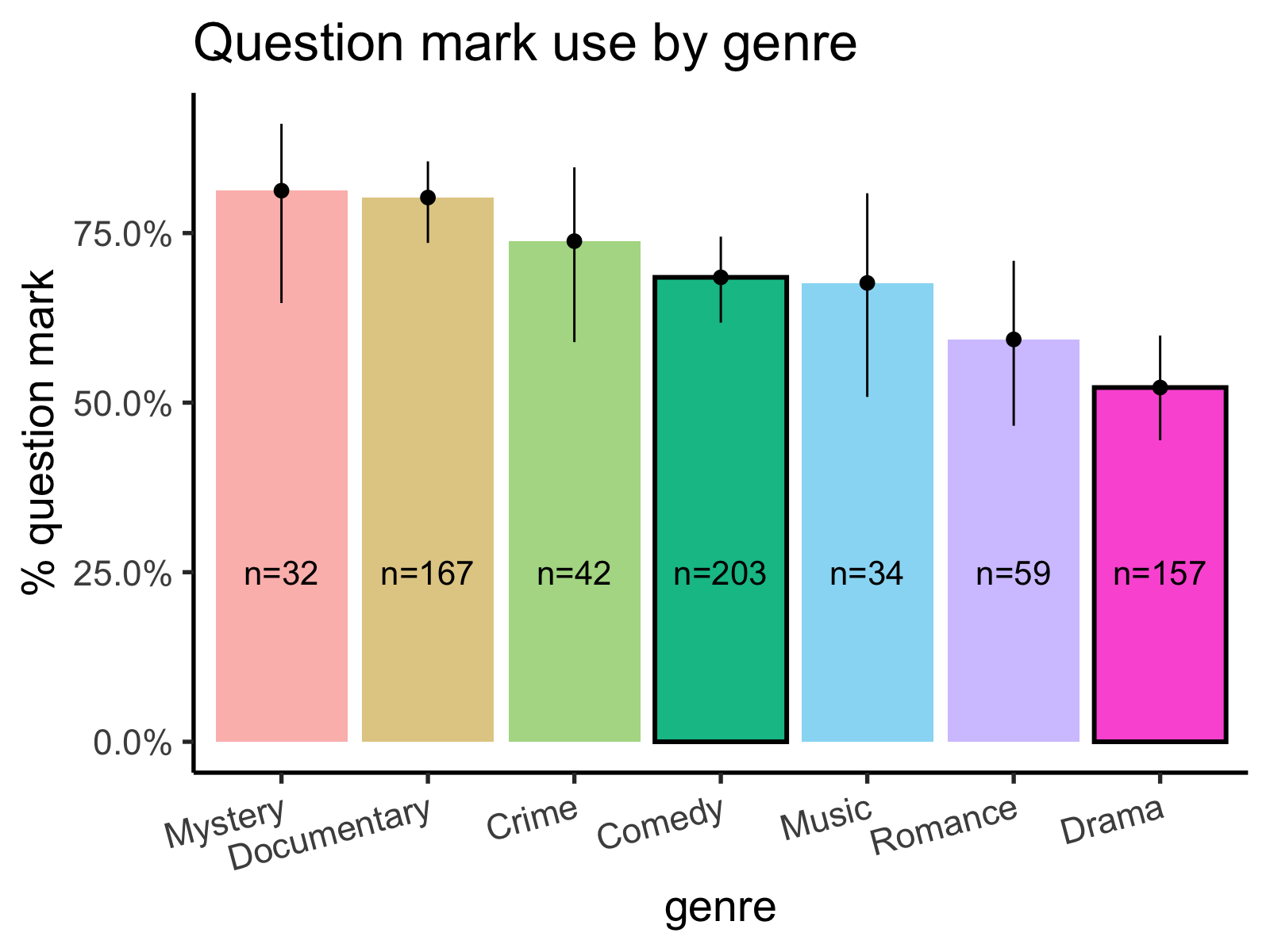

But maybe we should get more granular—maybe there’s still something going on beneath the surface. After all, Chris and Liz seem to think different genres might make different use of punctuation. Looking at the data, this claim definitely holds.

Comedies do use question marks at a higher proportion than dramas. Error bars represent 95% confidence intervals.

Comedies use question marks significantly more often than dramas do (p-value = 6.17 × 10⁻⁴ in a chi-squared test). Additionally, it’s not surprising that mystery, documentary, and crime films are the genres that most frequently employ question marks. After all, the whole premise of these movies is to reveal the answer to some sort of investigation.
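The genre comparison boils down to a 2×2 contingency table (comedy vs. drama, with vs. without a question mark) and a chi-squared test of independence: compare each observed count with the count expected if genre and punctuation were unrelated. This Python sketch computes the plain Pearson statistic; note that R’s `chisq.test` applies Yates’ continuity correction to 2×2 tables by default, and the post doesn’t say which variant produced the p-value above. The function name and the counts are hypothetical.

```python
import math


def chi2_2x2(table):
    """Pearson chi-squared test of independence for a 2x2 table of counts.

    No continuity correction is applied. Returns (statistic, p_value),
    using the closed form P(X > x) = erfc(sqrt(x / 2)) for 1 df.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Hypothetical counts of interrogative titles:
#            with "?"  without "?"
counts = [[68, 32],   # comedy
          [52, 48]]   # drama
print(chi2_2x2(counts))
```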

What’s So Damn Funny!

Perhaps, as our podcasting pair posit, “[a question mark] makes you feel kind of cheerful and goofy. You’re waiting for the punchline, you’re waiting for the shoe to drop. It’s like being told the first half of a joke.” If this is the case, we would probably expect that question marks hurt ratings for dramas but give comedies a boost.

We can restrict our dataset to only comedies and dramas and use a linear model to test whether the specific genre and “question-markedness” interact to affect ratings. That is, does including a question mark affect the movie’s rating differently depending on which genre the movie belongs to?

Focus on the left half of this graph: we see that comedies do BETTER with question marks and dramas do sliiiightly worse. I didn't have any hypotheses about movies that were neither (or both) of the two genres, but I included them on the right for completeness. Remember, this graph is interactive too!

Below, we included a little summary of our little linear model. The important part is that little asterisk to the far right of our “interaction” line.

```r
# Is the effect of a question mark modulated by comedy vs. drama? Yes
library(dplyr)  # for %>% and filter()

zach_df %>%
  filter(genre %in% c("comedy", "drama")) %>%
  lm(averageRating ~ has_mark * genre, data = .) %>%
  make_pretty_table()  # appears to be a custom helper that formats the model summary
```

| term | estimate | std.error | p-value | signif. |
|---|---|---|---|---|
| intercept | 5.602 | 0.182 | <2e-16 | *** |
| q-mark effect | 0.520 | 0.215 | 0.016 | * |
| genre effect | 0.751 | 0.245 | 0.002 | ** |
| interaction | -0.637 | 0.314 | 0.044 | * |

And according to our model,[7] there IS a significant interaction! When the title of a movie is a question, comedies tend to do better when they include a question mark, and dramas fare slightly worse when they include it. (It should be noted that this interaction term has a p-value of 0.0436, which is just barely under the common α = 0.05 significance threshold. But if you’re more of an AIC model comparison sort of person, this full interaction model also has a ∆AIC of 2.0124 compared to the next best model.[8])
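To see why a negative interaction means “comedies up, dramas slightly down,” it helps to add the coefficients into predicted ratings for each cell. This worked example assumes R’s default treatment contrasts with `has_mark = FALSE` and `comedy` as the baselines (the alphabetical factor order); the post doesn’t state this, but it is the reading consistent with the conclusions above. Writing mark and drama as 0/1 indicators:

$$
\widehat{\text{rating}} = \beta_0 + \beta_1\,\text{mark} + \beta_2\,\text{drama} + \beta_3\,(\text{mark} \times \text{drama})
$$

$$
\begin{aligned}
\text{comedy, no mark} &: \; 5.602\\
\text{comedy, mark} &: \; 5.602 + 0.520 = 6.122\\
\text{drama, no mark} &: \; 5.602 + 0.751 = 6.353\\
\text{drama, mark} &: \; 5.602 + 0.520 + 0.751 - 0.637 = 6.236
\end{aligned}
$$

So a question mark adds about 0.52 points for comedies, while for dramas its effect is 0.520 − 0.637 = −0.117, a slight drop.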

Do You Trust This Computer?

Listen, this methodology isn’t perfect. If you look through our dataset, you might find one or two movie titles that our algorithm misidentified as questions. There are certainly movies it failed to spot: They Shoot Horses, Don’t They? and O Brother, Where Art Thou? both slipped past it, even though Liz and Chris mentioned them in the episode. And there are a few that probably should have been screened out, like Do or Die. Perhaps the method our algorithm used to determine “question-ness” led to a biased subsample of movies; maybe those hard-to-detect titles happen to be quantitatively different from the ones we selected.

But here’s an idea: if a movie has a question mark in its title, we can be almost 100% confident that the title is a question, even if it’s an ungrammatical, weird question. (I’m looking at you, What Price Innocence?) We can easily gather up these titles and have the grand list (the whole population, the closest thing to ground truth) for one of the two treatments in our dataset. If our method has low bias, the question-marked titles in our sample should be representative of this grand, complete list. It’s a sanity check: does our refined subset of data reflect the whole?

Our results seem valid. At least we couldn't detect a bias between the subset of titles we used and the totality of titles that include question marks. Mmm! Those thicc curves though.

Check it. Although my eye wants to find slight differences between the two distributions, ma boi Krusky W tells me they aren’t significant (p-value = 0.254). Perrrrfect.

Who’s Afraid of Happy Endings?

Well, there it is. Overall, Chris and Liz’s intuition seems to have been quite accurate. Across all genres, the superstition that question marks affect success is just that: a baseless superstition, at least as far as IMDB ratings can tell. The pair were also justified in their claim that comedies use question marks in their titles more frequently than dramas. When a title is phrased as a question and listed as a comedy, it includes the proper punctuation ~68% of the time, while only ~52% of dramas do so. Lastly, and this is their most impressive pull as far as I’m concerned, Liz speculates that question marks can set up a mood of cheerful goofiness in comedies that doesn’t work for dramas. In our model, this bears out: comedies score better ratings when they have a question mark, and dramas do slightly worse.

As grad students, Zach and I are quite used to wasting long periods of time only partially solving mysteries that no one is particularly interested in. This is probably why I’m drawn to projects like 99PI that double down to investigate the overlooked facets of life, like the bizarre history of an (ostensibly) early 2000s pop song or an examination of vestigial architecture that endures against the odds. And if you’re reading this, chances are that you feel the same way. Maybe you have questions about the weight you lose in your sleep or how scientists paint tiny nametags on living ants. Most likely you don’t, but if you do now, maybe you should stick around and check out some of our other stuff.

Source Code:

This is the code Zach ran on his compute cluster to do part-of-speech tagging on the movie titles. The output of this code is needed for the next script.

This is the code we used to separate the movie titles that we defined as questions from the others. Zach wrote a little more about the process here.

If you want to see the source code for any of the cool, interactive plotly graphs or anything else from this post, you can check out the source code above.

Footnotes:

1. As with all progressive, pseudo-intellectual millennials.

2. We would have liked to measure success with box office revenue, but good financial data is hard to come by and IMDB is stingy with the info they dole out for free. Womp womp.

3. Well, more like 6.5 pairs: there were two movies entitled What About Me? and two movies entitled What About Me. So this was a weird quad-pairing? The quest for self-knowledge must be a popular movie plot.

4. Using a Shapiro-Wilk normality test, we find that the data is definitely non-normal (p-value = 3.98 × 10⁻⁵).

5. The Kruskal-Wallis test is an extension of the Mann–Whitney U test, in that it can handle more than two different groups. We’re only testing the difference between two groups here, but it still works. The test should be in every behavioral ecologist’s toolkit because it requires very, very few assumptions.

6. Note, though, that none of our results ever support the presumed Hollywood superstition that question marks will harm a movie’s rating.

7. You might be wondering why having a question mark seems to be a significant main effect here, while in the previous test we found that not to be true. Don’t worry, this is just because we subsetted the data for this model into only comedies and dramas. When we include all the other genres, this effect goes away. The full model suggests that the main effect we see in the reduced model is mostly driven by comedies, which do really seem to be affected by question marks.

8. But to be fair, this is also just above the common rule of thumb that says “a ∆AIC > 2 implies a clear choice.”